Let's be honest. We've all done it. You have a small, annoying coding problem. You whisper a few words into the glowing text box of your favorite LLM, and poof—code appears. It’s a messy, beautiful, and slightly terrifying form of magic. This is "vibe coding," and it feels like you've been handed a squad of tiny, hyper-enthusiastic gremlins. They’re eager, they’re fast, and they have absolutely no common sense. Sometimes they build a perfect birdhouse. Other times they replace your car's engine with a badger.

For a while, that was fine. It was a party trick. But now, we're being asked to build actual, serious things with these gremlins. And that’s a different job entirely. Tossing a vague wish at an AI and hoping for the best is one thing; shipping a product built by a legion of digital assistants who learned to code by reading the entirety of Stack Overflow (including the angry parts) is another. This is where the real work begins. This is "vibe engineering."

If vibe coding is letting the gremlins run wild, vibe engineering is becoming their perpetually exhausted, over-caffeinated manager. Your job is no longer to lay every single brick. Your job is to be the architect, the foreman, and the quality assurance department all at once, operating at the speed of thought.

It turns out that these AI gremlins, for all their god-like speed and encyclopedic knowledge, thrive under the exact same conditions that human junior developers do, just amplified to a ludicrous degree:

They need a plan, not a suggestion. You can't just tell a gremlin "make a login page." That's how you get a login page that authenticates users based on their astrological sign. You have to give them a detailed spec, a blueprint. You have to think through the architecture first, because they absolutely will not. They have no intuition, only instructions.

Their definition of "done" is a lie. An AI will hand you a piece of code with the unshakeable confidence of a freshly minted bootcamp grad. It will assure you it works. It will not have considered a single edge case. This is why a solid test suite is no longer a best practice; it's a non-negotiable containment field. Test-driven development becomes your ghost trap. You build the trap first, then dare the AI to produce a ghost that can escape it.

They have terrible taste. The code an AI writes might be functionally correct, but it’s often ugly, unidiomatic, and completely divorced from the style of your existing codebase. So you become a relentless code reviewer. You're not just checking for bugs; you're acting as a finishing school, teaching the gremlin (through feedback and refinement) how to write code that a human can stand to look at six months from now.

They will cheat if you let them. Without good documentation, an LLM will just start making stuff up about how the rest of your system works. But if you feed it well-written docs, it has a far better shot at using your APIs the way you intended. It’s the ultimate incentive to finally write that documentation you’ve been putting off for three years.
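To make the ghost-trap idea from the testing point concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the username rules, the `TRAP` case list, and the two `gremlin_*` functions standing in for AI-generated attempts. The point is only the order of operations: the edge cases get written down first, and each candidate implementation is judged against them.

```python
import re

# The trap: edge cases written down BEFORE any implementation exists.
# Each entry is (input, expected_result). The username rules are hypothetical:
# 3 to 32 characters, letters, digits, and underscores only.
TRAP = [
    ("alice", True),
    ("", False),             # empty string
    ("ab", False),           # too short
    ("a" * 33, False),       # too long
    ("alice smith", False),  # embedded whitespace
    ("alice\n", False),      # trailing newline
    ("Alice_99", True),
]


def failures(candidate):
    """Return every (input, expected) case the candidate gets wrong."""
    return [
        (value, expected)
        for value, expected in TRAP
        if candidate(value) is not expected
    ]


def gremlin_draft(username):
    """A plausible first attempt: checks length and nothing else."""
    return 3 <= len(username) <= 32


_USERNAME = re.compile(r"[A-Za-z0-9_]{3,32}")


def gremlin_revision(username):
    """A second attempt, produced after feeding the failures back."""
    return _USERNAME.fullmatch(username) is not None


if __name__ == "__main__":
    print("draft escapes:", failures(gremlin_draft))
    print("revision escapes:", failures(gremlin_revision))
```

The first draft looks confident and still lets the whitespace and newline cases through. The revision clears the trap, which tells you only that it handles the cases you thought of, so the list of cases is where your own effort should go.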

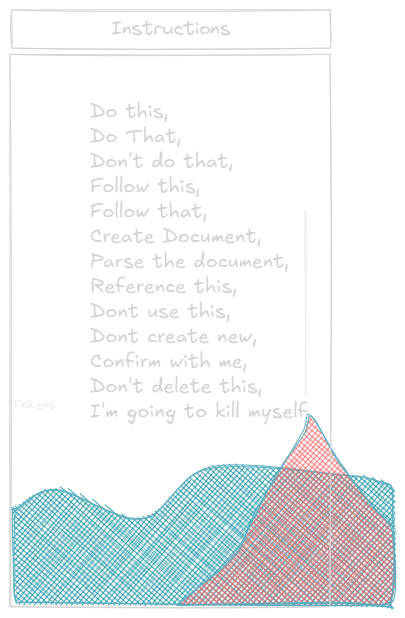

This is a weird new form of mental exhaustion. It’s less about the deep, methodical focus of writing code and more about the frantic, high-context juggling of a project manager trying to direct a thousand interns who can all type a million words per minute. You’re not just a builder anymore. You're a strategist, a delegator, and a professional skeptic. You have to develop an entirely new intuition: a gut feeling for what can be outsourced to your weird digital workforce and what requires the nuanced, imperfect touch of a human hand.

This isn’t about being replaced. It's about being promoted. We've all been unceremoniously bumped up from craftsmen to managers of a chaotic, brilliant, and utterly alien new kind of intelligence. It’s the weirdest job I’ve ever had, and I’m pretty sure it’s the future. Now, if you'll excuse me, I have to go check if my login page is asking for the user's rising moon.